Charles Y. Liu

Nonlinear Dynamical Modeling of Human Intracranial Brain Activity with Flexible Inference

Dec 28, 2025

Abstract:Dynamical modeling of multisite human intracranial neural recordings is essential for developing neurotechnologies such as brain-computer interfaces (BCIs). Linear dynamical models are widely used for this purpose due to their interpretability and their suitability for BCIs. In particular, these models enable flexible real-time inference, even in the presence of missing neural samples, which often occur in wireless BCIs. However, neural activity can exhibit nonlinear structure that is not captured by linear models. Furthermore, while recurrent neural network models can capture nonlinearity, their inference does not directly address handling missing observations. To address this gap, recent work introduced DFINE, a deep learning framework that integrates neural networks with linear state-space models to capture nonlinearities while enabling flexible inference. However, DFINE was developed for intracortical recordings that measure localized neuronal populations. Here we extend DFINE to modeling of multisite human intracranial electroencephalography (iEEG) recordings. We find that DFINE significantly outperforms linear state-space models (LSSMs) in forecasting future neural activity. Furthermore, DFINE matches or exceeds the accuracy of a gated recurrent unit (GRU) model in neural forecasting, indicating that a linear dynamical backbone, when paired and jointly trained with nonlinear neural networks, can effectively describe the dynamics of iEEG signals while also enabling flexible inference. Additionally, DFINE handles missing observations more robustly than the baselines, demonstrating its flexible inference and utility for BCIs. Finally, DFINE's advantage over LSSM is more pronounced in high gamma spectral bands. Taken together, these findings highlight DFINE as a strong and flexible framework for modeling human iEEG dynamics, with potential applications in next-generation BCIs.
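The abstract's point that linear state-space models tolerate missing samples can be illustrated with a minimal scalar Kalman filter. This is a sketch of the general technique only, not DFINE or the authors' code: the function name `kalman_filter_missing` and every parameter value (`a`, `c`, `q`, `r`, `x0`, `p0`) are hypothetical choices made for illustration. A missing sample is represented as `None`; for such a step the filter runs the prediction alone and skips the measurement update.

```python
from typing import List, Optional, Sequence, Tuple


def kalman_filter_missing(
    ys: Sequence[Optional[float]],
    a: float = 0.9,   # state transition (assumed value)
    c: float = 1.0,   # observation gain (assumed value)
    q: float = 0.1,   # process noise variance (assumed value)
    r: float = 0.5,   # observation noise variance (assumed value)
    x0: float = 0.0,  # initial state mean (assumed value)
    p0: float = 1.0,  # initial state variance (assumed value)
) -> List[Tuple[float, float]]:
    """Filter a scalar model x_t = a*x_{t-1} + w_t, y_t = c*x_t + v_t.

    Returns the filtered (mean, variance) at every time step.
    Entries of `ys` equal to None are treated as missing samples.
    """
    x, p = x0, p0
    estimates: List[Tuple[float, float]] = []
    for y in ys:
        # Prediction step: always runs, observed or not.
        x = a * x
        p = a * p * a + q
        if y is not None:
            # Measurement update: only when a sample is available.
            k = p * c / (c * p * c + r)  # Kalman gain
            x = x + k * (y - c * x)
            p = (1.0 - k * c) * p
        estimates.append((x, p))
    return estimates


if __name__ == "__main__":
    # The middle sample is dropped, as might happen on a wireless link.
    for mean, var in kalman_filter_missing([1.0, None, 1.0]):
        print(f"mean={mean:.4f} var={var:.4f}")
```

With these assumed parameters, the estimate at the missing step is the previous estimate propagated through the dynamics, and its variance grows until the next sample arrives.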

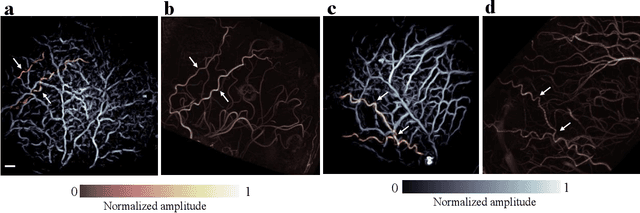

Rotational ultrasound and photoacoustic tomography of the human body

Apr 22, 2025

Abstract:Imaging the human body's morphological and angiographic information is essential for diagnosing, monitoring, and treating medical conditions. Ultrasonography performs the morphological assessment of the soft tissue based on acoustic impedance variations, whereas photoacoustic tomography (PAT) can visualize blood vessels based on intrinsic hemoglobin absorption. Three-dimensional (3D) panoramic imaging of the vasculature is generally not practical in conventional ultrasonography with limited field-of-view (FOV) probes, and PAT does not provide sufficient scattering-based soft tissue morphological contrast. Complementing each other, fast panoramic rotational ultrasound tomography (RUST) and PAT are integrated for hybrid rotational ultrasound and photoacoustic tomography (RUS-PAT), which obtains 3D ultrasound structural and PAT angiographic images of the human body quasi-simultaneously. The RUST functionality is achieved in a cost-effective manner using a single-element ultrasonic transducer for ultrasound transmission and rotating arc-shaped arrays for 3D panoramic detection. RUST is superior to conventional ultrasonography, which either has a limited FOV with a linear array or is high-cost with a hemispherical array that requires both transmission and receiving. By switching the acoustic source to a light source, the system is conveniently converted to PAT mode to acquire angiographic images in the same region. Using RUS-PAT, we have successfully imaged the human head, breast, hand, and foot with a 10 cm diameter FOV, submillimeter isotropic resolution, and 10 s imaging time for each modality. The 3D RUS-PAT is a powerful tool for high-speed, 3D, dual-contrast imaging of the human body with potential for rapid clinical translation.

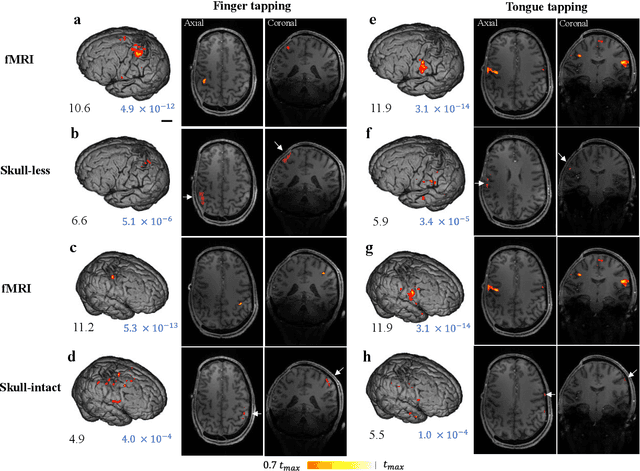

Transcranial photoacoustic computed tomography of human brain function

Jun 01, 2022

Abstract:Herein we report the first in-human transcranial imaging of brain function using photoacoustic computed tomography. Functional responses to benchmark motor tasks were imaged on both the skull-less and the skull-intact hemispheres of a hemicraniectomy patient. The observed brain responses in these preliminary results demonstrate the potential of photoacoustic computed tomography for achieving transcranial functional imaging.
